Sora Alpha Artists: The Next Chapter in AI Storytelling

Speakers

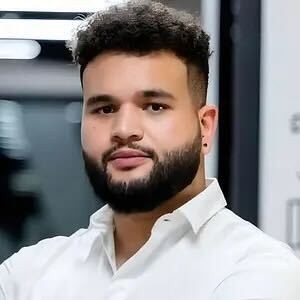

Manuel Sainsily is a TED Speaker and XR Design Instructor at McGill University and UMass Boston, who has published an episode about AI, Ethics, and the Future on Masterclass.

Born in Guadeloupe, a French archipelago in the Caribbean, he moved to Montreal in 2010 to finalize his Master of Science in Computer Science. More than a decade later, with experience in industries ranging from aerospace to gaming and a second citizenship as a Canadian, he is now a trilingual public speaker, an educator, and a multidisciplinary artist who champions the responsible use and understanding of emerging technologies such as Artificial Intelligence, Spatial Computing, Haptics, and Brain-Computer Interfaces.

From speaking at worldwide tech conferences to receiving a Mozilla Rise25 Award and producing events with Meta, NVIDIA, OpenAI, and VIFFest, Manuel amplifies the conversation around art and cultural content through inspiring, educational, and interactive experiences.

Venezuelan-born Will Selviz is a trailblazer in immersive media and experiential design. His diverse upbringing across Latin America, the Middle East, and Canada fuels his innovative approach to digital storytelling. A Clio Award winner and SXSW XR director, Will seamlessly blends traditional and cutting-edge technologies.

Will’s expertise has driven tech adoption for industry giants like Nike, Meta, NBA, Microsoft, and OpenAI. Passionate about neuroscience and Brain-Computer Interface (BCI) technology, he aims to fuse creativity with scientific rigor. Drawing on his computer science and design background, Selviz crafts inclusive experiences that foster a sense of belonging.

SUMMARY

Will Selviz and Manuel Sainsily shared their innovative use of AI, XR, and immersive media to preserve cultural heritage, promote inclusive creativity, and explore new storytelling paradigms. Through their collaborative project “Protopica,” they demonstrated how tools like Sora, ImageGen, and GPT-4 can empower global youth, democratize access to emerging technologies, and showcase the positive impact of AI on art, culture, and education. Their work exemplifies how AI can drive shared prosperity, enhance public engagement, and foster a globally inclusive creative ecosystem.

TRANSCRIPT

Tonight, I'd like to introduce you to two of the most innovative creatives I know, Will Selviz and Manuel Sainsily, to share the powerful ways they're leveraging AI tools to preserve cultural heritage and make works of art that will move you.

Manuel Sainsily is a TEDx speaker and XR design instructor at McGill University and UMass Boston, who has published an episode about AI, ethics, and the future on Masterclass. So if you haven't checked him out on Masterclass, I encourage you all to. Born in Guadeloupe, a French archipelago in the Caribbean, he moved to Montreal in 2010 to finalize his Master of Science in Computer Science. More than a decade later, with experience in industries ranging from aerospace to gaming and a second citizenship as a Canadian, he's now a trilingual public speaker, educator, and multidisciplinary artist who champions the responsible use and understanding of emerging technologies such as artificial intelligence, spatial computing, haptics, and brain-computer interfaces.

From speaking at worldwide tech conferences to receiving a Mozilla Rise25 Award and producing events with Meta, NVIDIA, OpenAI, and VIFFest, Manuel amplifies the conversation around art and cultural content through inspiring, educational, and interactive experiences. We are so grateful to have him here tonight.

Our other speaker, Venezuelan-born Will Selviz, is a trailblazer in immersive media and the experiential design world. His diverse upbringing across Latin America, the Middle East, and Canada fuels his innovative approach to digital storytelling. A Clio Award winner and South by Southwest XR director, Will seamlessly blends traditional and cutting-edge technologies. Will's expertise has driven tech adoption for industry giants like Nike, Meta, NBA, Microsoft, and OpenAI. Passionate about neuroscience and brain-computer interface technology, he aims to fuse creativity with scientific rigor. Drawing on his computer science and design background, Selviz crafts inclusive experiences for us all.

Will and Manu have also agreed to be OpenAI Forum Fellows for this season, so you'll continue to see more of them as time goes on, and I'm just so very grateful that they're here. Please help me in welcoming Manu and Will to the stage.

Hello. Hey, how's it going? Thanks for having us. Exactly. And this is our second time, I think, Will, doing a live event with the Forum, and we're really excited because so much has happened since the last time we came on stage. Today we're going to dig a little deeper into what we presented last time and showcase some of the latest improvements, workflows, and tools that OpenAI has been releasing. If you have any questions, please use the chat. Maybe the first question I have is: where are you joining us from? Drop your location in the chat. We would love to know if this is a worldwide event or if everybody is in the same room.

Where are you based, Manu?

Right now I'm in Montreal, Canada, and I'm always flying somewhere, so I might not be there next week.

Where are you?

I'm in Toronto. Not too far. We actually see each other once a week, so it's kind of rare to have this one-on-one. I'm very excited to do it online. But yeah, we're both based out of Canada. As you know, we have a lot to dive into, so we'll go straight into it.

Let's do it. So this presentation is called The Next Chapter in AI Storytelling. I think something pretty interesting here is to explore the different tools in our tool belt and how we are connecting them to expand the universe of Protopica, and we'll explain what Protopica is along the way. You've heard the amazing introduction from Natalie. Thank you so much again. Feel free to take a screenshot right now, but we'll move on. So Will, why don't you talk to them about our ethos?

Yeah. So again, Protopica started as a community committed to driving meaningful progress through emerging technologies and digital preservation. Digital preservation is one of the biggest parts I want to highlight. Manu and I share a strong passion for intentionally archiving and documenting our cultures, and not just ours: we want to create a blueprint for other people to share theirs. That's really what Protopica is about. We treat it as an opportunity to combine our separate creative careers and let people be part of the journey as well, whether through conversations like this one or through art installations and experiences. We've been very lucky to travel around the world doing this, and we're looking forward to continuing. In terms of our core values and beliefs, we believe in being global citizens and in our duty to preserve culture, whether that means taking a 3D scan of a landscape, a landmark, or something else we perceive as valuable to our culture. That's actually the origin of Protopica, and we'll touch a little on that, but really, think of it as emerging technology meets digital preservation meets storytelling. What happens when you empower people at a very granular level, from the ground up, with tools and technologies like the ones we're going to talk about today, Sora and ImageGen? More importantly, what are the repercussions, and what are the positive outcomes, of doing so? So, yeah.

Very well said. Protopica has had a pretty short life, but at the same time a very full one. It's only been a year and a half since we started calling what we do Protopica, but Will and I actually met three years ago, during exhibitions we each held a week apart. I had my exhibition in Montreal, and Will had his in Toronto a week before that. He happened to visit me in Montreal to join me at my exhibition, and we connected there for the first time and realized that we were basically doing exactly the same things: immersive storytelling, directing films in XR, scanning the world in 3D, playing with augmented reality, and so on. Will's exhibition was sponsored by Microsoft and Meta, and he was showcasing some very powerful Caribbean-futurism-inspired visuals, sounds, and worlds. And I had exactly the same concept, literally a week later, sponsored by OpenAI this time.

And all of this happened the week that ChatGPT came out in 2022, which is insane, because we were already part of the DALL·E alpha, helping shape the tool, making sure that the training data was representative of the cultures we represent, and helping OpenAI make the tool as good as possible before its public release. But we only had a couple of weeks to play with ChatGPT before it became public. So all of this happened pretty fast, and I remember going on stage with ChatGPT, talking about the water access issue that we have in Guadeloupe and sharing a poem about it, which touched people deeply. Then I told them that I hadn't written any of it: it was generated by AI.

I don't think people at the time really understood what that would mean for the world. Now we're almost two and a half years later, and so much has happened. ChatGPT is now supercharged with multimodality and many more tools, and we're going to talk a little about that tonight as well.

So it all started at OCAD University, where Will studied and graduated. He invited me in February last year for a talk on preserving cultural heritage. We talked about it on stage and looked into many use cases around the world of how AI is being used to do that, not only from a computer vision perspective but also through language and generative storytelling, and how people are preserving their own culture in different shapes and media. Culture is not just spoken language; it can also be dance, food, and so many other layers. Then, from February 2024 to about July 2024, we had the opportunity to join the Sora Alpha. We helped generate some early ideas and concepts, and we also tested the tool and really saw its limits.

Yeah, including the user interface and the user experience as well. Actually, Will, maybe you can tell them a little about the difference between Sora back then and Sora now.

Yeah. In terms of capabilities, it's pretty much the same; the model is very similar. But it has expanded a lot with storyboarding. For those who are familiar with Sora, the text-to-video and image-to-video model, storyboarding is a big plus: on a 5, 10, or 20 second timeline, you can create different keyframes or events. That's something that has been beautifully shaped from the Alpha until now, and you can see it slowly evolving with image generation: you can even insert images along the way in your storyboard. I encourage you all to open it and try it. I think that's one of the features that has come the furthest since the Alpha, and we'll show you very shortly how we've been leveraging it.

Yeah. Love that. And so, yeah, we basically worked on our first short film together at the time to showcase two of Sora's main capabilities, Remix and Blend. There's actually a video that we just released with OpenAI Sora on Instagram; if you're interested, you can watch it. It dives a little deeper into that particular process. And if you missed the last OpenAI Forum live stream that Will and I did, you can find it on the Forum. I really recommend re-watching it, because there are a lot of insights in that one. The film later achieved over 500,000 views before Sora was publicly released, and then, thanks to the team, we had the opportunity to be selected for Sora Selects, a screening tour that they did around the world.

First in New York, where we were selected along with 10 other artists, then in Los Angeles, and very recently in Tokyo, I think a week or two ago. It was amazing to see the diversity of people from all around the world using the tools in their own way and tapping into their own traditional backgrounds. Some people with no creative background were able to leverage these tools to tap into their creativity, and film directors were able to expand on their universes. There's a lot to feel and to see thanks to that. I think it's a tool that is an enabler, and it's very cool to see how people are using it very differently from us, for example.

Then we did VIFF, the Vancouver International Film Festival, a little later, in October, and we turned the short film into an interactive installation using real-time visuals that responded to brain activity. Both Will and I have experience with brain-computer interfaces, and we're really interested in using EEG to let participants not just passively watch a story but actually interact with it, changing the timeline and the linearity of the story itself. We're already working on the second version of this art installation for Sónar in Barcelona, happening in June. This is really exciting to us, and we're happy to showcase today some of the visuals and ideas that we're exploring right now using OpenAI tools.

But without further ado, let's watch the first short film that we did with Sora.

Imagine a space. Farther than the physical world, a liminal space where the mind can migrate and explore an infinity of possibilities. In this space, the mind can morph. And try a lot of things until they find the ideal ship somewhere else. We don't process what we do by chance, but we work meticulously. We research on a cosmic scale. The mind can examine each opportunity. And it can pacify each path it wants to develop. Do we want to give it a privilege? Or a difficulty? Choices well thought out. Because the mind can look for these experiences that will change us more deeply. In the end, it doesn't choose this incarnation. A new departure for the destination. Life never lives by accident, but by well-prepared travel. What is it supposed to give us?

Always happy to re-watch this.

This is a year old now, Will.

Yeah, wow.

One of the things that I think is really cool about this is seeing how far Sora has evolved. We had many different characters and ideas, and for this particular short we were working within the limitations of the model, which could only output 720p visuals and so on. Also, like we said, it was only text-to-video, right?

Yeah, only text-to-video. There was no image input at the time.

Between 900 and 1,000-plus generations, and some of the transitions were made using Remix and Blend, like here when you go through the tree. But again, we dove deep into this in the last live stream, so we'll move forward with this presentation and tap into some much more recent tools.

So this is the link to the last one, where we talked about our workflow: from scripting, to visualizing ideas with AI, to validating our culture with people around us, to the traditional video editing part and the execution. You can find it on the Forum. It was called Preserving the Past and Shaping the Future.

All right, let's get to the exciting stuff now. I'll let you introduce it, Will.

Yeah, so as you know, there was a huge announcement last week: ChatGPT and Sora now support a brand-new model called ImageGen. ImageGen is a multimodal image generation model, which allows us not just to create pretty images but to analyze and think through very complex requests and prompts. This presentation is really about showing the potential it has in combination with tools like Sora. In our case, because we're inherently storytellers and love telling stories from around the world, we wanted to open up our process, essentially let you into our space, so you can take a page from it and see how you would take it from here.

Actually, the poster on the very left was created with it. We'll dive a little deeper into this, but as you know, Manu and I are constantly alpha testing some of these features, so we get to work with the model and give feedback for a little while ahead of the release. That's just some context for this. Excited to dive into the next slide.

One thing that needs to be said is that the text here was generated from the same prompt as the image, and that's one of the particularities of 4o image generation: it's really good with text, actually pretty crazy. I remember the back-and-forth when Will texted me this image. The first version was almost Arial, white on black, and then he added one more iteration saying, hey, can you make the font a bit more tribal, or tap into those colors, and so on, and then boom, that was the result.

Yeah, it's able to iterate very quickly.

Yeah, on this, we'll touch a little on our process. We recently got into bringing 3D and AR, augmented reality, into the mix using ImageGen. A very intuitive thing we did as soon as we got access was to take the characters we've been generating. We have hundreds of characters and a whole lore and bible for each tribe and element in Protopica, so we said, let's take five or six and start tinkering with them. This one in particular is a Skull character, and, if you go to the next slide, Manu, we decided to turn him into a T-pose action figure. You can see the proportions are slightly different. For those not familiar with 3D, a T-pose is just extending the arms out to the sides like that, and it's the standard pose for rigging a character, essentially turning it into a puppet for 3D animation. That's why we went with exactly this generation: one of our first use cases was to turn just a simple image into a T-pose figure ready for 3D.

As you can tell, ImageGen is filling in the blanks pretty much entirely, except for the head, the face, and a little of the torso. It did an amazing job of figuring out the attire for the legs and the proportions of the arms; everything that's not visible is just it doing its thing. We did give it specific prompting to make it a bit more stylized. It could have kept it one-to-one, but we decided to go with slightly different proportions and a more stylized look. The prompt is at the top: we told it to turn this into a collectible vinyl toy in a T-pose on a white background. In this case we removed the background for the presentation, but it's also very helpful to be able to define the background color you want, for product rendering reasons or even for VFX, where you can ask for a green screen. Those are the sorts of things you can handle on the prompting side.
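The background tip above is easy to act on after generation. As a minimal sketch, assuming the generated image has already been decoded into a list of RGB tuples (a hypothetical in-memory format; a real pipeline would load the file with an imaging library), a hard-threshold chroma key turns every pixel close to the requested background color transparent. The function name `chroma_key`, the default pure-green key, and the tolerance of 60 are illustrative assumptions, not part of the speakers' workflow.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def chroma_key(pixels: List[RGB], key: RGB = (0, 255, 0), tolerance: float = 60.0) -> List[RGBA]:
    """Hypothetical helper: make pixels near `key` fully transparent.

    Uses a hard threshold on Euclidean distance in RGB space; pixels within
    `tolerance` of the key color get alpha 0, everything else alpha 255.
    """
    tol_sq = tolerance * tolerance  # compare squared distances to avoid sqrt
    out: List[RGBA] = []
    for r, g, b in pixels:
        dist_sq = (r - key[0]) ** 2 + (g - key[1]) ** 2 + (b - key[2]) ** 2
        alpha = 0 if dist_sq <= tol_sq else 255
        out.append((r, g, b, alpha))
    return out


if __name__ == "__main__":
    # Pure green, near-green, a skin-like tone, and white.
    row = [(0, 255, 0), (10, 240, 20), (200, 180, 160), (255, 255, 255)]
    print(chroma_key(row))
    # Same row keyed against a white background instead.
    print(chroma_key(row, key=(255, 255, 255)))
```

Passing `key=(255, 255, 255)` handles the white-background case described above. A hard threshold leaves jagged edges and ignores color spill; production keyers usually add soft alpha ramps and spill suppression, which this sketch omits.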

The green screen is actually a pretty good tip. Another interesting thing here is that I believe that, because of the blurry hints of the characters in the background, the fact that it saw the sand, the water, and the palm trees, it understood the vibe of the character, and that's why we see sandals and shorts, or even the way the belt follows the kind of cloth we see on the character here. I'm really impressed by how it's able to change the art style and the type of character while maintaining a good portion of the details. For example, on the skin here, there's almost a wave of white and then a little bit of gray, and that is translated perfectly here.

Yeah, and there are, again, many iterations, and we pick the one we like. For this one, for example, the nose is slightly darker than the original, and we were like, oh, we actually like this. It's like throwing clay on a pottery wheel: we try to control it as much as we can and keep it as malleable as possible, but sometimes you're surprised by the way it spins, and you're like, oh, I actually like this. That's why our process might take 900 generations: not because it doesn't get it right in 900 attempts, but because you keep building on what comes out; that's the creative process with gen AI in this case. Exactly. Yeah, you want to talk about this, Manu?

So obviously, a big portion of Will's and my process is getting into a room together and ideating as much as we can before we sit down and nail down a specific process. In this case, ImageGen was super useful, because as soon as we got access and realized that we could basically ask for anything, we started asking for everything. Initially we were like, OK, let's do a little storytelling and world building. We've had all of those stories written in Notion for the past four to five years, including some individual stories from before we even met that we merged together. We had already played with ChatGPT in the past to come up with new ideas and iterate on them, but it was always from a text perspective, never visual. And although we do love DALL·E for its particular use cases, when it came to photorealism it was not the strongest model at the time. There was a lot of competition on the market, and open-source tools came out that were really competitive, so we never shied away from using multiple tools in our process and choosing each tool for its best capability at a specific time. We used a lot of image models in the past year to come up with some of the early concepts for our characters, and then brought all of those visuals and inputs back into ChatGPT with the new model. ImageGen is available in ChatGPT, and that's how I think most of the world knows about it, but you also have access to it in Sora. Sora has a slightly different interface, a bit more visual, and you can work the way you're used to in Sora when it comes to remixing and things like that. The in-painting aspect is there as well. I know ChatGPT has it now too, but I do like Sora for its speed. I think I get results a little faster, and you get two or four generations per prompt, which you can't really get in ChatGPT.
There it's one at a time. So for those of you who have both ChatGPT and Sora, I recommend using ImageGen in Sora. Just give it a try; you'll see you can iterate a little faster with it. Some of those concepts you can see on the right here are kind of Dungeons & Dragons. My initial idea was, OK, let's try a trading card game and see if we can get elements, health points, or some text there. All of the text is actually generated by ChatGPT again: a Caribbean-inspired clan, the Bone Tribe channels spiritual energy. What we did was train a custom GPT on Protopica and our stories, and some of that data is already in the thread before we generate images. So ChatGPT not only taps into the input image to generate the card, but also into the lore, and that's how it's able to create a card with such a striking resemblance to the image and with content that fits the character.

A couple of things worth mentioning, Manu, is why to use ImageGen in Sora versus ChatGPT. One thing we found is the speed at which we can iterate. Sora lets you create four iterations every time you prompt it, and you can have, I believe, up to five concurrent generations. So you can generate 20 images in the time it usually takes to generate one or two in ChatGPT. That makes a lot of sense, because ChatGPT is more of a back-and-forth conversation on a specific request, whereas Sora is more exploratory: you do a lot of exploration of the concepts. For example, the poster that we showed earlier was actually made using ChatGPT.
And it made sense, because it has a different interface. Both of them have editing tools and options, but depending on what you're going for, ChatGPT has somewhat more robust brushing, editing, and prompting tools. So if you're really trying to hone in on one image and do specific things, I think ChatGPT is better for that. If you're trying to explore many concepts at once, you'll find that Sora gives you a lot more breadth of exploration, just because you can generate up to 20 images in the same amount of time. That was something we realized as we were creating this. And let's just take a second to appreciate how perfect the text is, with no typos. Typos are very rare, actually, which is so strange. The first thing we did when we tested the model was see how many attempts it would take before the first typo, and that kind of flipped the script: before, we had to iterate many times to get the text right in other models, but with this one, we had to iterate many times to get it wrong. So I'll leave it at that, but it's a really cool differentiation between the two ways to access ImageGen and how that's changing our process.

Well said. And on the right here, there's another example with a collectible toy figurine, and you can see the difference between the two, right? Even though this is a stylized, illustrative art style, the character is doing exactly the same pose. The details are the same, but you can tell that this one feels plastic, feels 3D. The shadows are so accurate, there's the plastic cover on top, and there are multiple texts in different fonts and sizes saying different things, all accurate. This one says collectible toy, bone mask tribe. This one is clearly a title. "Figure" has a different font size. The H5 plus at the bottom left looks exactly like what you would see on the package. The warning, even the icon, is generated. So again, a lot of details. We published this post on the Protopica World Instagram, so if you wanna follow all of our exciting iterations, you can find it in the carousel there, with more details on the prompts in the caption. The immediate next step after that was to get to 3D. This is actually Will's screen share here, so I'll let you explain.

Yeah, so for image to 3D, there are multiple models online known for this; just do a simple Google search for image to 3D and you'll find a bunch. In this case, we took the T-pose that we showed you, ran it through one of these models, and then rigged it and gave it an animation, all within the same interface. Honestly, everything we just mentioned doesn't involve opening a single piece of 3D software, which, for me, after doing 3D for almost 13 years, is mind-blowing. You don't even need to own any 3D software to do this at this point. We were actually iterating on our phones, which is crazy; we don't even need high-end hardware. iOS lets you visualize it in augmented reality, which we're going to show in a second, but everything we're doing, we could easily have done from our phones. That just goes to show how quickly we can go from conceiving an idea, to iterating on it, to creating a cinematic IP or universe, whatever you want to call it. At the end of the day, it's like, hey, we have all these characters we already thought about and put on the back burner years ago; why don't we dive deeper into each one of them? It brings visualizing and manifesting ideas as a creative to a whole different level, and it really opened a lot of doors for us to keep building in that direction.

This is actually a very good point. I consider myself a mobile creator because I spend a lot of time traveling for work. Whether I'm going to conferences or universities to teach, I'm always on a plane. And yes, I do have a huge laptop with a giant battery pack, which is not always fun to carry around, and I also have a MacBook for smaller events, but being able to iterate quickly on an idea with just your phone is great, and it doesn't even have to end up as a project.
Sometimes you just wanna have fun and ideate. Will and I are not always in the same city, so we communicate through Instagram messages or iMessage. Being able to go from the idea to the image to the 3D model, send each other a .usdz file, and then just put it into your environment and test it is actually mind-blowing for us, because now we can have this iterative, back-and-forth collaboration from the palm of our hand.

Yeah, so a little deeper into this. This is where we started to get into extra territory.

We don't have to do this, but it is available to us. In this case, we're using a tool like Procreate on the iPad, and there are other tools for iPhone as well, which let you open a 3D model and paint the textures. The only thing, for anyone with a 3D background here: the tools currently available for image-to-3D are lacking a little on the UV mapping and topology side. So I highly recommend giving it a little love on that side before you start painting on it, because it's not really optimized for that. I pushed it as far as I could in this example without showing some of the flaws I just mentioned. But for something put together this quickly, I think it's really impressive. Because ImageGen came out, this sort of workflow is possible much faster. Was this possible with other models? Perhaps, but what I'm really pointing to is the ease of it.

Prior to this, we had to install local models or use cloud models that were just very inaccessible, and the workflows were really...

Yeah, just getting from zero to one took hours and hours, and we personally didn't find that appealing. We tried a few times and we liked it, but again, the beauty of ImageGen with Sora right now is the speed at which we can iterate and the fact that we can do it from any device, really.

And I'm saying this as a creative who maximizes efficiency and time, not because OpenAI has us here today. It's genuinely one of the most exciting tools we've played with, because as you can tell, we would finish working on something and start the next thing just because we wanted to see what it could do, and we're still getting surprised.

We're filling our drives and our storage with thousands of images and videos now. Sorry, the final result, as you can see, is this AR (augmented reality) little dude walking. And you can see some visuals at the bottom.

So those are static images that we upscaled and animated using Sora. And that was actually a very interesting result, because it made us realize, okay, there are so many characters that we have. Could we potentially expand that universe without having to go back into the other workflows we used when we created our LoRA and so on?

So that's where those new use cases come into play. And I know we have about five minutes before the end, so we're gonna go a little quicker through these, but we'll make sure to show the juice.

Yeah, how about we do... So in this case, the poster design that Manu and I were talking about earlier: we had a little bit of a conversation with ChatGPT here where I was like, hey, here are these two characters that we have. Can you turn them into the protagonists of our story and create a story called Protopica of the Twin Veil? And it came up with a bunch of iterations, but what's really impressive is how it kept a lot of the features and transferred the style from this sort of animated, simplified 2D to hyper-realistic.

Usually you're more used to seeing it go the other way around, rather than adding more detail. So that was impressive. And also the text, the placement, everything, just immaculate in my opinion. And you can see that we have two images; this is why it's multimodal: we can prompt using images. So we used two images to show the characters and then we used text to tell it what to do. It was able to think through both and keep the gradient between the images too, and it did it in such a good way with the text in the middle.

Yeah, really impressed.

Yeah, and you're able to zoom in, right? I saw like a little...

Yeah, there you go. Very impressive. Yeah, we'll do a speed round of experimentation here so we can cover it all.

Yeah, so other things that I think could be fun for those that are interested to try is that you can create comic books. Obviously you've seen a lot of ideas of people doing comic books on the internet.

In this case, we're trying to do this earth-friendly kind of poster where we took all of the characters that we generated here. Those are previous images Will and I made together. Then we brought them all into Sora, attached the four images to the prompt, and said, hey, create a comic strip with speech bubbles around the characters saying positive messages about the earth.

And the fact that it translated all of these villains (because they do look like villains in terms of the art style, the details, the mean faces and so on), kept all of those details, and put those characters together, merged into one image while being converted to a different art style, was actually pretty impressive to us.

And what's cool is that you can continue to do that with any art style or concept that you want.

For example, this was a chibi toy figurine, and this is not a T-pose, but you can then send it to ChatGPT as an update and say, hey, create a T-pose version of this character. And if you have skills with, again, like Will said, topology, or with some image-to-3D tools, you could go ahead and print this little dude with a 3D printer at home and add some paint on top.

And you have yourself a toy that you created from literally just text prompts.

Yeah, and so what we did after that was take all of those images, send them into ChatGPT, and ask it (and we used Sora as well) to expand the universe and create some more characters using other animals that we hadn't played with yet.

So initially we had some very diverse ideas, but then we wanted to expand on that and add more. So we generated almost 45 to 50-plus new characters, and every time we had an image generated by ChatGPT, we ran it through Sora to generate a five-second clip of that character.

So we don't have the new short ready yet, but we do have this kind of teaser slash trailer where all of the characters play back-to-back, one after the other, just to show you the quality that you get from the ideation part, but also the quality that Sora gives by bringing life to those characters. There is no sound, so don't feel bad.

So all of those clips were generated with Sora from an image that was generated by ChatGPT, inspired by the universe and the different characters that we used as input.

Yeah, I think what's really exciting is being able to go back and forth between Sora and image gen. And in some cases we've gotten very exciting results where it reinterprets what we give it.

Yeah, Sora continues to get better at filling in the blanks, right? So if the camera zooms out, it generates in the video what the shoulders should look like, what the feet should look like. So really, when you have a single image that's cropped in, there are a lot of surprises that can come out of building on it.

So as you can see, sometimes it might reinterpret what you gave it, which again, just adds more dimension to the experimentation.

And here are some of the shots that we got, again, with Blend and Remix. This is not edited; it's just pieced together. It's all raw. It's just a showcase.

Yeah, and it's all raw. So essentially, comparing the first film to this, obviously there's a lot of potential for each individual clip. But again, Sora gives us the opportunity to blend things together.

So there are certain shots or characters that we can blend to morph or to act in a certain way. And we didn't have to attempt that many times to get the right effect or the right look and feel. All of this was done in an afternoon, on a whim.

Okay, that was awesome, guys. I didn't expect to feel even more goosebumps than I did the first time. That was really beautiful. And I'm glad we were able to play the sound this time.

Okay, Jen Garcia says that she loves the website and your mission to empower global youth to shape digital futures through adaptive programs that evolve with the rapidly changing tech landscape. She would like to know: what are some of the ways that you're empowering youth globally?

Will, you should talk about your Microsoft program.

Yeah, again, there are different touch points, whether it's through Manu or me having different partnerships with companies, or through initiatives. For example, one of the personal limitations that I found early on as a 3D artist was access to hardware.

So that meant building computers and having ways to access them remotely from across the world. That's a project that I worked on for about two or three years. And again, I've worked with Microsoft at a very granular level, directly on mentoring and finding mentees within the community in Canada, mostly Vancouver and Toronto.

But most important for us is being more of an ad hoc team. So essentially working with, again, Manu; I know you work with the VR community as well. And we both work with Kids Who Code. I think it has a different organization name now, but that's how we've worked the past two or three years.

But the point is, we kind of just plug ourselves into different existing initiatives and support them in any way that we can, whether it's giving feedback or giving our expertise like we're doing here today.

One of the things that I'm most proud of is the Children's Cancer Research Fund work that we do with James Origo. They do a monthly VR game night for youth who are impacted by childhood cancer. And so we hop on a VR headset.

I always have my Quest 3, and you can see a bunch of VR headsets behind me because I've been in the field for 15 years now. But you know, when you get to play with this age group, you realize how much creativity they have, and the lack of boundaries is so inspiring.

And sure, they are going through really tough hardships in life, but they are always smiling. They're always sharing. They're always collaborating. And I'm actually learning more from them than I'm teaching them in those moments.

And then you bring that creativity back into your workflow. And it's just, to me, it's a reminder to stay a kid in your head and to use AI as a tool that you can have fun with as well.

And hopefully that's what we were able to showcase tonight by showing you all of these different examples: go out there and try stuff. It doesn't have to be final. It doesn't have to be used in a presentation or anything. Sometimes just a little napkin drawing can turn into a fantastic idea.

Aw, thanks guys. That was a beautiful response. And it kind of dovetails into this very different but related question. Sean Wu from Chapman University would like to know, how do you see wearable XR, AR and AI coming together to redefine the way we create and experience art in the future?

I love that. That's a great question. I think actually we can touch a little bit on wearables and brain-computer interfaces, which is the last project that we worked on. We're actually currently working on the V2 of that. So again, we believe a lot in how interfaces can change the way we experience filmmaking. In our case, depending on your emotions, it will display different films or different voiceovers and so on. This was at the Vancouver International Film Festival. And just in general, the data that we have access to, whether it's through the Apple Watch or through a sensor or a headband, whatever we're going to put on, could change the experience or provide some sort of feedback, which with Gen AI can now be generated on the spot, whereas before it had to be pre-rendered, pre-done. So I think the convergence of that is really exciting.

Again, we're seeing brain-computer sensors or interfaces inside of VR headsets, headphones, and consumer goods. That itself could allow creatives to be more iterative in the way they design experiences that respond to our emotions, or to a whole new side of us; essentially, they have a new variable to work with. So that's where Manu and I are very excited. That's actually a great question. I don't know if you want to add to that, Manu.

Yeah, I do have some of those. In the last version of our installation, we used the Muse headband, which is a five-channel EEG BCI product. And you have these copper elements here that basically sit on your forehead and can read your brain signals.

And then for the next exhibition, we're working with a different, newer device. This one is from Neurosity. It's called the Crown, and it has a few more channels. And basically the way that you have to see these things is that, first of all, it's non-intrusive. You don't have to put a hole in your head to make it work. You just put it on like a crown. Non-invasive.

Non-invasive. And we really see it as a controller. So whether it's an Apple Watch like Will said, a BCI headset, or anything else, you can see them as controllers, like the ones you use to control a game. But instead of controlling a game, in this sense, we're controlling the experience. And the experience can be visual or audio, or in our case, multimodal and multisensory. And we're really thinking about the future of storytelling in that sense, so that it's not just a passive experience: the person watching can participate.

I like the way you describe it as putting it on like a crown. And I think we're definitely gonna have to host you guys in person for a live, in-real-life installation; that would be really fun. Actually, I saw a fun installation at the entrance of the OpenAI building in San Francisco, which was kind of a live ChatGPT installation. Caitlin from the OpenAI team showed it to me. It really reminded me of what we're doing with Will, because they are using ChatGPT as a conversational agent that welcomes you into the experience, and they're using the image model to generate images within this kind of dome, like a flower. And I was like, that looks exactly like our art direction. I love this so much. And I could see some of those experiences working well together. We just need to figure out when you have the time, because I know you both also have multiple full-time jobs and are always traveling. So let's...

Oh, actually, that just reminds me of something, guys: Music Is Math. I don't think you were there in person. Maybe you came for the livestream, but our friend Tony Jebara, the head of AI at Spotify, wore a Motives headset during a collaboration between an AI composer from Wave AI and classical performers: the first-chair violinist in song from the San Francisco Symphony, and her husband, the creative director of the Santa Cruz Symphony, on the piano. And Tony's brainwaves, how he was responding to the music, were being projected for us. So all sorts of really cool interdisciplinary experiences there.

Okay, a couple more questions here, and then we'll move to the live Q&A for the community members. I saw a really good one. Okay, so Jose Lizaraga, and Jose, good to see you. I think you're new to the community. Really great to have you. He's a visiting researcher and senior advisor at the UC Berkeley School of Education and a part of the Algorithmic Justice League, which we all have a lot of respect for over here. His question:

Does the quick iteration of this new model mean that prompt engineering will be different? What do you think about that?

I do think so, because first of all, there is a lot of backend prompt enhancement happening. And that's not just OpenAI or this model in particular; it's been the case for a while. Most AI models in the world have some level of prompt lifting in the background, because their makers know that most people are not engineers, or not prompt engineers per se, and the models need to understand the input to give the best output, especially with paid models where people are counting their credits. You don't want people to iterate thousands of times until they get what they mean. But when it comes to images in particular, I think OpenAI is doing an excellent job at capturing the essence of what the person wants, and not just from the text, but from everything else, right? If you're using memory in ChatGPT, some of that data is also taken into account. In Sora, the image matters as much as the prompt and the text. And then, if you're using Sora, there is the storyboard level, where the image you input is translated into a prompt in the backend. It's almost like a way to translate the image back into a prompt. And those details that you see visually, that someone could pick up and start listing on a sheet, are made apparent to you.

And so now you have the opportunity to either edit that generated text, keep it, or add to it. So I do think that you don't need as much experience as a prompt engineer to get great results nowadays. First, because the model just gives amazing results by default. But on top of that, because it's more of a conversation. You use natural language the same way you would talk to an artist on your team. And if you're used to working collaboratively with other humans, you will work with AI the same way, by just talking through your edits. And I feel like that's a much more natural way to work. Like Will said earlier, if you have a more linear, specific workflow, you'll go back and forth in ChatGPT. If you're in Sora, it's more iterative and more exploratory.

I'd just quickly add to that: one of the biggest things that changed prompting for us is combining an LLM with this way of prompting the image model. We have attached PDFs and told it to generate an infographic of what the whole thing means, right? It could be a 50-page PDF, but if you have a single image that sort of parses through that, I think it really shows that we've come really far in terms of how you can prompt it, and that whole dynamic has changed a lot. There is a prompt example that I did just to see the capability of the model, where I said, generate the periodic table of elements, with all of the different elements on one table. But then I asked it to turn the thumbnail image of each of those elements into an object made out of that element. And ChatGPT accurately did it. I had the 50-plus images intricately put into a table, with their text properly written under each. So I'm thinking of all of the use cases for education, for schools. My fiancé is a teacher, so I already gave the tool to her. And I was like, hey, if you're working with a specific team, you now have access to this model that can help you generate infographics, like Will said, for your projects. And that's just enabling so much more potential in the world, I think.

Thank you so much, fellas. Because of all of this amazing Q&A, we're running a little bit behind, so we're gonna go ahead and wrap it up here. For those of you who have already completed your profiles and are full members of the forum, we'll see you in the live Q&A, which will last about 10 or 15 minutes. And I'll just leave you with some closing remarks for now. Thank you everybody for joining us, especially Will and Manu. Honestly, it's just such a privilege to host these fellas. And I really hope that we can think about a really cool creative installation to host them in real life in the future and invite all of you.

So on the horizon, on April 9th, we have another event. It's called Leading Impactful ChatGPT Trainings, for enthusiasts who are working to help their colleagues and friends adopt the tool. If you're the person in your job, your field, or your friend group who's the ChatGPT user (what do they call us, power users?), then I would 100% show up for this. The training will be taught by Lois Newman. It'll be live, and Lois is an amazing technologist. You can see a lot of her previous trainings in the content tab in the forum, in the playlist called ChatGPT Trainings. And she'll definitely help you and your team get the most out of ChatGPT and teach you how to use it really well.

We also have a lot more coming up. Please check your emails; when we send one from the OpenAI Forum, we always include the registration button for you. We have a newsletter coming out soon. We'll be sharing some recordings and an essay from the AI economics event that we hosted in person back in March. And we will see you all very soon. Thanks for joining us tonight.