Sora Alpha Artists: Preserving the Past and Shaping the Future

Speakers

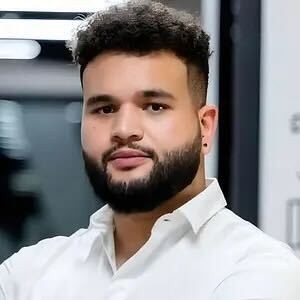

Venezuelan-born Will Selviz is a trailblazer in immersive media and experiential design. His diverse upbringing across Latin America, the Middle East, and Canada fuels his innovative approach to digital storytelling. A Clio Award winner and SXSW XR director, Will seamlessly blends traditional and cutting-edge technologies.

Will has collaborated on tech adoption initiatives with industry giants like Nike, Meta, NBA, Microsoft, and OpenAI. Passionate about neuroscience and Brain-Computer Interface (BCI) technology, he aims to fuse creativity with scientific rigor. Drawing on his computer science and design background, Selviz crafts inclusive experiences that foster a sense of belonging.

Manuel Sainsily is a TED Speaker and XR Design Instructor at McGill University and UMass Boston, who has published an episode about AI, Ethics, and the Future on Masterclass.

Originally born in Guadeloupe, a French archipelago in the Caribbean, he moved to Montreal in 2010 to complete his Master of Science in Computer Science. With over a decade of experience in industries ranging from aerospace to gaming, and a second citizenship as a Canadian, he is now a trilingual public speaker, an educator, and a multidisciplinary artist who champions the responsible use and understanding of emerging technologies such as Artificial Intelligence, Spatial Computing, Haptics, and Brain-Computer Interfaces.

From speaking at worldwide tech conferences, to receiving a Mozilla Rise25 Award, and producing events with Meta, NVIDIA, OpenAI, and VIFFest, Manuel amplifies the conversation around art and cultural content through inspiring, educative, and interactive experiences.

SUMMARY

At the OpenAI Forum event, artists and technologists Will Selviz and Manuel Sainsily explored how AI can empower creatives, democratize storytelling, and preserve cultural heritage. The discussion centered on their short film, Protopica, which was created using OpenAI’s generative video model, Sora, and was selected for Sora Selects in New York. Their work demonstrates how AI can be used as a creative tool, not a replacement, allowing for innovative storytelling while ensuring cultural narratives remain authentic and accessible.

Selviz and Sainsily highlighted the urgent need for cultural preservation, especially for communities facing displacement, language erosion, and the loss of traditional artifacts. They noted that a language disappears every two weeks, raising concerns about the preservation of oral traditions and indigenous knowledge. AI offers new ways to document and share these histories.

For instance, Sainsily, who hails from Guadeloupe, emphasized that his native Creole language is spoken by fewer than half a million people and has limited digital representation. He described how AI could help preserve underrepresented languages, for example by building a custom GPT on a knowledge base of poetry, literature, and oral history. He also used a Guadeloupean Creole voiceover in Protopica to showcase the potential of AI-driven language preservation.

Selviz, a Venezuelan-born digital artist, spoke about using AI-powered 3D scanning, spatial computing, and generative models to archive artifacts, from traditional Venezuelan masks to Kuwaiti weaving patterns. AI allows communities to digitally document their culture without relying solely on institutions, making preservation more accessible and democratized.

TRANSCRIPT

I'm Natalie Cone, your OpenAI Forum Community Architect. I like to start all of our talks by reminding us of OpenAI's mission, which is to ensure that artificial general intelligence, AGI, by which we mean highly autonomous systems that outperform humans at most economically valuable work, benefits all of humanity.

Tonight marks a new era in the OpenAI Forum, where we're going to place more emphasis on human creativity and cultural production. How better to introduce this theme than with the artists who have collaborated with OpenAI technology from the start, Will Selviz and Manuel Sainsily.

Will Selviz is Director of Operations and Partner at Rendered Media. He's Venezuelan-born and a trailblazer in immersive media and experiential design. His diverse upbringing across Latin America, the Middle East, and Canada fuels his innovative approach to digital storytelling. A Clio Award winner and South by Southwest XR director, Will seamlessly blends traditional and cutting-edge technologies. Will has collaborated on tech adoption initiatives with industry giants like Nike, Meta, NBA, Microsoft, and OpenAI. Passionate about neuroscience and brain-computer interface (BCI) technology, he aims to fuse creativity with scientific rigor. Drawing on his computer science and design background, Selviz crafts inclusive experiences that foster a sense of belonging.

Manuel Sainsily is CEO and Global Voice on AI Ethics at Manu Vision Inc. Manu Sainsily is a TED speaker and XR design instructor at McGill University and UMass Boston who has published an episode about AI, ethics, and the future on Masterclass. Get it, Manu? Originally born in Guadeloupe, a French archipelago in the Caribbean, he moved to Montreal in 2010 to complete his Master of Science in Computer Science. With over a decade of experience in industries ranging from aerospace to gaming, and a second citizenship as a Canadian, he is now a trilingual public speaker, an educator, and a multidisciplinary artist who champions the responsible use and understanding of emerging technologies such as artificial intelligence, spatial computing, haptics, and brain-computer interfaces. From speaking at tech conferences worldwide to receiving a Mozilla Rise25 award and producing events with Meta, NVIDIA, yours truly, OpenAI, and VIFFest, Manu amplifies the conversation around art and cultural content through inspiring, educational, and interactive experiences.

Please help me welcome Will and Manu to the stage.

Thank you so much, Natalie.

Thank you, Natalie.

Welcome, fellows. I would also love to mention that for the next three months, Will and Manu are OpenAI Forum Fellows. So Caitlin and I are going to be collaborating with them on events, on community building, and on what sorts of human-creativity-forward content we should be producing, because they're the experts. So good to have you guys. I'm going to hand the mic over to you, get out of your way, and I'll be back to help facilitate the Q&A.

Thanks for having us.

Thanks, Natalie. Yeah, I'm going to start by sharing my screen so that Will and I can present our slides. And as Natalie says, this is a very intimate experience with the OpenAI Forum folks. The goal is to talk to you, but we also have a big portion of Q&A at the end. We want you to use the Q&A tab, and don't hesitate to ask questions in the chat. We'll be reading and trying to answer all of your questions.

Yeah. So first of all, thank you, Natalie, for the great introduction; that saves us one slide. Today we're going to be talking about preserving the past and shaping the future. What you're seeing right now is the poster we made for Protopica, our short film that was selected for Sora Selects in New York a couple of weeks ago, which was an incredible event by OpenAI. We're extremely grateful to be collaborating with them again. Today our goal is to talk a little bit more about what Protopica is and what we tried to achieve with this project, and to give you a lot of insights from our work with Sora and the process behind it. So, yours truly, Manuel and Will. You can call me Manu. And without further ado, Will, why don't you introduce them to our ethos?

Yeah. So Protopica started as a collaborative project between Manuel and me. We had a lot of parallels in our missions, and independent work in cultural preservation and technology that overlapped over the span of five years. Our mission is to be guided by intentionality, to innovate, and to take practical, futuristic, yet grounded approaches that we can apply to preserve our culture. In Manu's case, on the island of Guadeloupe, he was seeing a lot of erosion of the land and a lot of natural events, with landmarks on his island disappearing every time he visited. In my case, I've been away from my homeland for about 13 years, so the only way I've been able to showcase my culture is with digital tools. I'm summarizing a lot of this on this slide, but essentially it's about what we're seeing in the diaspora and in a future of mass migration and other world events, and how people can proactively leverage artificial intelligence and other converging tools to preserve history, both in spatial computing and in entertainment and media arts, and where the two intersect.

Well said. And I think a lot of that blending came from our individual past experiences. Like Will said, when we met, we realized that we had been working for almost five years in very parallel lanes: all of his background is in art, 3D design, XR, and AI, and I was doing the same thing. When we met, it was a match made in heaven. Like, who are you? The Spider-Man meme. We just started working together, and it all started with an amazing talk that we gave at OCAD University, where Will graduated from, which is a very prestigious university in Toronto. For those in Ontario, you know what it is. It's a design school, and it covers architecture as well. There is so much history at that university, and being able to be there was amazing.

But initially, we didn't plan to talk about artificial intelligence from a place of art, but only from a place of cultural preservation, which is really at the core of what we do. We were interested in how that can help humanity, which is very aligned with OpenAI's mission. So let's talk a little bit more about that. What is cultural heritage preservation, and how can emerging technologies help us? Will, can you describe some of the GIFs you're seeing right there?

Yeah, so this is a little bit of a showcase. Before Manu and I collaborated on Protopica, we each had our own practices leveraging, in this case, a lot of Gaussian splats and NeRFs, which are fairly entry-level use cases. At the same time, I've traveled to different places; as Natalie mentioned, I grew up in Kuwait, in the Middle East. So I had the opportunity to introduce this technology to different places around the world that can now use it to document and showcase their heritage. For example, the one you're seeing at the top right, with the basketball and the net, uses a very traditional Kuwaiti weaving pattern. That was just a small example of something much larger that was showcased in a museum over there. At the bottom, there's a very traditional Venezuelan mask, from a tradition that's over 400 years old. Leveraging holographic displays, 3D painting, and 3D sculpting, we did other cultural exchanges digitally, which, again, were very much inception moments. I think this sl...


technology evolved, NeRFs came out. A lot of people started talking about neural radiance fields, or NeRFs. A NeRF capture was based solely on a video input or a couple of images, without really needing to reconstruct or clean everything up afterward. It was also, I would say, less demanding to consume on lower-end devices, which helped people view very high-quality captures on smaller devices like VR headsets.

And so a lot of the work that Will and I did in the past was about cultural preservation: thinking about how we can digitally preserve the things we see around us, and how we can empower anyone with a phone in their pocket to also become agents of that preservation. Because it's not just the work of historians and scientists; every one of us comes from a different place in the world, with different backgrounds and cultures, and we can all do our part. So in our exhibitions, you'll find some of those artifacts, and you can manipulate them, play with them in 3D, or just consume them and use them in different contexts. That leads to the visual on the far right of the screen, which was the title of my exhibition, Caribbean Futurism. It draws from Afrofuturism, but through the lens of the multicultural diversity of the Caribbean.

In that visual, it's an amazing dancer from Guadeloupe, and we used very early-stage AI image and video models to overlay visuals on top of her. So there's always this mix of traditional art and digital art in our work, and I think that translates very well to what we did with Sora after that.

All right. Will, why don't you introduce us to this slide?

Yeah, this is a slide that we come back to very often. Actually, Manu, you can speak better to the language aspect, so let's just start there.

Let's start there. In the talk that we did at OCAD, this slide was where we used a very daunting and scary metric that Will and I learned about two years ago: a language dies every two weeks. So first of all, what does that mean? That sounds scary to me.

One of the first things we asked ourselves was, how many languages are there? How many are left? If one disappears every two weeks, that sounds like a very rapid and urgent problem to address, right? There's a project from Microsoft Research called ELLORA, about enabling low-resource languages, and they shared an infographic and data in 2023 about language statuses.

There are about 7,000 living languages in the world. Most of them, obviously, are spoken by a very small number of people, while just a few, like English, French, Spanish, and Chinese, are spoken by a very large number of people. So a lot of languages, and the cultures connected to them, disappear when the people from those smaller cultures die. There's the story of Boa Sr., from the Andaman Islands, who was the last person connected to her language. She was the last person on earth speaking it when she passed away in 2010, and her entire culture disappeared with her.

And there was not enough work and research done with her to reconstruct much of what disappeared with her. That realization made us think, what about our cultures? Could that happen to Canadian culture? Could that happen to American culture? Could that happen to Guadeloupean or Venezuelan culture? And what can we do with the tools we have today, without waiting for tomorrow's advancements, to start doing something about it? That is why, for us, representing things digitally is a way to save them. It's a way to preserve them.

It's similar to the Svalbard Global Seed Vault in Norway, I think, where they save the seeds from different fruits and vegetables so that in the future they could, say, clone bananas, because we lost the taste of the original bananas from 100 years ago. We thought the same thing. We were like, hey, this mask that Will scanned in Venezuela 13 years ago: eventually, you won't be able to find artifacts like that. If we could create these museums, this kind of digital vault of all of our cultures combined, and inspire other people to do the same for their cultures, then eventually we'd be able to preserve them. And obviously, the language side connects to AI very quickly. When I did my TED Talk in 2022 back in Guadeloupe, I was live on stage using ChatGPT, and it spoke Guadeloupean Creole, even though Guadeloupean Creole is not really that available on the internet. It's not something it could have been trained on very well. It was not perfect, but it made me think, hey, I could maybe create a custom GPT around a knowledge base of poets and writers, people with perfect writing and grammar in Guadeloupean Creole, and preserve my language behind this custom GPT. And that's one step toward doing it.

If you want to add to that, Will.

No, I think you addressed most of it. We'll talk a little bit about how that comes into play with the film as well, but I think that's in a later slide. Perfect. So let's move on. As we mentioned, Protopica had a bit of an evolution. It all started with an OCAD University talk that we were invited to about a year ago; actually, we celebrated its anniversary last week. A lot of this came from inspiration from different perspectives. Manu and I are both world builders and storytellers, and we both have a technical background in computer science. But our ethics and our ethos when it comes to storytelling are about not clinging to either utopia or dystopia. We came across the definition of a protopia, which leans more toward the idea that things can be proactively worked on, and is more attainable than a utopia. So the idea of a protopia became something we were already drawn to maybe four or five years ago. When it came to coming up with a theme and a place, we thought of Protopica as a series, almost like an anthology of films, that we're shaping to tell stories from different places around the world.

In a way, that creates a bigger picture of what stories are possible to tell using generative AI. In this case, we created the film with OpenAI. We were invited among the first 10 artists to have access to the alpha version of Sora about a year ago, and almost immediately, we started working on this. About six months later, it was published on OpenAI's official social platforms. And very recently, less than two weeks ago, we were, as Manu mentioned, in New York for the Sora Selects event, a public screening at the Metrograph, a recognized theater in New York, where it was very well received. We're very excited and super grateful for the opportunity from OpenAI as well.

But just backtracking a little bit to October last year, which I skipped: we were also invited to the Vancouver International Film Festival, where we did something a little different by adding an experiential component alongside a screening of the film. People could experience the film using EEG technology, so brainwave technology connected to a computer; we'll dive a little bit more into that later. We're thinking in ways that go beyond just watching a film, toward experiencing the film and getting biometric data or feedback while you watch it.

So yeah, we'll touch on that shortly. Perfect. We do have the short film that we intended to play for you, but because we have some audio issues, and we don't want to spoil it, we'll share the link at the end so you can watch it. It's a minute and a half, so it's not too long; you can watch it right after the talk. But we might show some snippets of it later on to emphasize some aspects of the process.

So in terms of workflow, this is basically what we went through from ideation to execution, and we're going to try to explain each of those steps right now. The idea here is also to show how this is still very close to the traditional workflow of creating a film, but it does introduce a lot of flexibility in post-processing, which is a bit of my specialty: VFX, 3D animation, and editing. So we'll talk a little bit about that. In the Q&A, feel free to drop any specific questions you have about our process as well.

Manuel, do you want to talk a little bit about the ideation?

Yeah, so my background is in product design. I've spent 15 years working with companies like IBM, building interfaces, and design thinking workshops have really changed the way I approach brainstorming and collaboration. Will also has a background in design from OCAD. When we started thinking about how we would even go about writing a script together, first of all, we were not living in the same city. Although Will ended up moving to Montreal for a while, we were mostly working digitally or remotely together. So we used a very large canvas, like the whiteboarding apps out there, to dump all of our ideas into a free-flowing, infinite mind-mapping space.

And we started with a core question: what if our lives result from choices made long before our existence? This draws from the folklore of the Caribbean, which deals with ideas like reincarnation and other more meditative practices.

And we wanted the story to be something that will resonate with anyone in the world. So the concept of home, the concept of moving and being displaced was really important for us to express. And using a movie to express this idea was perfect. But we didn't want to have AI directing the script or directing how we should showcase everything. So we actually started from the themes and even from an audio format.

For us, coming up with the sound, and with the cadence and rhythm of the story, was more important than what the visuals would look like. And to be fair, there could have been thousands of different versions of Protopica, given the randomness and the fact that we allowed the AI to take part in the process.

So we really tried to merge the Caribbean futurism approach from our past exhibitions, and Latin American influences, with a global perspective.

And from the get-go, we knew that this could evolve beyond a short film. We had ideas of making a video game, writing a short story, or doing a cartoon or comic books, expanding that universe across multiple mediums. As multidimensional artists, that's something we always think about: our art doesn't just express itself in 2D. But this first draft was really perfect for us, and using this tool to showcase the different visuals in the first images that came out was really helpful for arranging our ideas.

I'm also keeping a little bit of an eye on time. So I'll get through some of these as well. But in terms of scripting and narrative structure, so we actually took a different approach and I highly recommend this, especially with generative video. We started with audio first.

In this case, we had a very strong script that we worked on and refined for days, if not weeks. We had a very specific message we wanted to tell, one that felt universal.

In our case, we did it in English first. We edited the entire film using this voiceover audio, and then at the very end, we swapped it with Guadeloupean Creole audio in Manu's voice. That was a decision we made at the end because of what we talked about earlier about cultural preservation and language.

Manu's language, I think, is spoken by just under half a million people. You can correct me on the number, Manu. But it's such a specific use case that even when we shared it, people came to Manu and said, hey, there's someone speaking Guadeloupean Creole in this OpenAI film, you should collaborate with them.

And again, it speaks to the importance of some of the opportunities we get as creatives: to be on stage and really highlight a culture, or to learn about other cultures.

So again, the narration allowed us to fit into place what we wanted the story to be. Obviously, we had a bit of an idea of how it was going to start, how it was going to end, and what it was going to be about. But really, the magic of Sora, and how I encourage everyone to leverage it, is that it's almost like a pottery wheel: you do have control and agency, but sometimes it will surprise you and give you something you can riff off of.

I would say that's one of the most powerful parts of Sora, and we can dive a little bit deeper into that. But for now, I don't know if you have anything to add, Manu.

Also Will, I just wanna say, don't feel rushed fellas. This is your time, we'll have plenty of time for Q&A, definitely get through the content that you wanna share with us all.

Perfect, and I see a question live in the chat from Ekaterina asking why we wanted to start with audio first. It was actually Will's idea, because a lot of his past work in film came from doing exactly that.

When you start with audio, you're a little bit less biased, I think, by the first few visuals that you capture, whether it's with a digital camera or with generative AI.

At the end of the day, your movie kind of takes shape as you start filming it. But if you already have an idea of a story and you play with the audio, you can touch people from a different perspective.

And I have synesthesia, so for me, sound is very important in the way I approach and think about art.

And coming up with the voiceover also gave us the different portions of the film.

Like, how long should we spend talking about this part, or that other part? And it was easier to then add visuals using AI, literally like a digital camera, to shoot the different things we had in mind, as opposed to doing the opposite: trying to find those perfect visuals and then making them fit together.

So I think it's a much more natural and traditional approach. And finally, the last line on the screen is about the looping journey. When you watch the short film, you'll see that the beginning connects very well to the last frame. That's something we were able to achieve thanks to one of Sora's features, Remix.

With Remix, you can start from a clip that was already generated from a prompt. At the time, we didn't have image input, so it was really a lot of prompt engineering: writing the right prompts, trial and error. We did about a thousand generations, but we only kept 27 in the end.

But that clip that we started with, it was important for us to reuse it at the end because of that reincarnation and looping journey we wanted to tell.

Using the Remix feature, we were able to change the age of a character but keep the same clothing, the same composition in the scene, the same lighting, and so on, which helped us create this really interesting effect.

I see a question from Kush that's really good. I'm gonna save it for the next slide, which I think talks a little bit about AI visualization.

Perfect. So, as Manu just mentioned, we went through over a thousand generations. A lot of that number has to do with what I mentioned earlier: sometimes you're looking for something specific, Sora surprises you, and you go off on a tangent to explore something totally different that the engine sort of suggested. That is what I found very powerful about Sora compared to other models: the ability to blend and remix things together.

In this case, Protopica was actually born out of the need to showcase Blend and Remix, two features that at the time were in the alpha phase, as OpenAI was publishing the paper and showing these features. In a second, I'll switch to my camera, where I can show some of that in video context.

But for now, I'll also answer Kush's question, which is what limitations do you run into that you want the tech community to focus more on? So I would say definitely physics. It's something that Sora is doing really well.

But when I say physics, I mean more so like when you see someone walking, like how grounded they are.

I know it's something that's currently being worked on by a lot of models at the same time. But realistically, it worked in our favor for this theme because it's very ethereal, it's very slow, it's great.

Manu and I are really good at focusing on our strengths, which in this case was this sort of theme. But if we wanted something very intentional, and again, I come from a 3D animation background, if I want something to interpolate in a specific way, it's obviously not there yet. That's true of the technology as a whole, not just Sora: the state of generative video is just not there yet. So I would say physics, and maybe micro-adjustments to movement, which I think are coming. It's only a matter of time, with how fast things are moving. But yeah, that's my comment there. If you have something to add, Manu.

No, I think that's a very good point. Another limitation that we ran into was obviously the lack of image input at the time that we did the film.

Because again, it was a very early stage for Sora. It was even the previous model, and the goal was to showcase Blend and Remix. But the fun part is that we got to be the first to try it and give a lot of feedback to the team, which they prioritized and ended up implementing for the release. So now, people who pay for Sora can actually use image input.

And that is fantastic, because in the short films people can make right now, they can have character consistency, and they can build stories using other AI tools.

And that creates more collaboration between artists and companies, which I think is super needed in the industry. So it's actually beautiful to see.

At the same time, playing with limitations is a superpower too, because it gives you a sandbox to play in.

And I think that gave us a lot of opportunities as well.

Exactly. Next, cultural validation. Obviously, when you tell stories from specific cultures, there's this term, digital blackface, and a question you have to ask yourself: should you be generating visuals from a culture that's not yours?

I think art is very open in that regard, and it's not for us to judge, but since we were talking about our own culture, we felt it was important to represent it well.

So we did a lot of user testing. Even though we couldn't really talk about Sora to a lot of people, since we were under NDA, we were able to include some people in the pipeline, thanks to the OpenAI team. We tried to get a lot of feedback early on to make sure that my voice, the scripting, and the accent I was using sounded accurate to people's ears, and that the visuals we created and their symbolism didn't depict anything in a biased way.

And I would say I was actually surprised. In the very early days of DALL·E, ChatGPT, and Sora, you always have this fear that some part of the training might not be very strong on some aspects of the world. But it was actually better than I expected, and as we continued to provide feedback, it just kept getting better and better. It's still not perfect. We're talking about generative AI here, so obviously there are limitations, and you have to play a kind of Russian roulette game, trying again and again until you get to what you're trying to achieve. But overall, we were really satisfied with the result, and the way people reacted when the film came out proved to us that it resonated with them. So it was important that we had this seal of validation from our community before we went ahead and kept sharing it.

And what I was going to add to that, Manu, is that at the core of Protopica, we always looked at this as an opportunity to create a blueprint for pairing someone with a technical background, whether in generative AI, 3D animation, or something else, someone who is very visually fluent, with a person or organization that holds the cultural knowledge.

There are cultures around the world that focus specifically on oral storytelling. They don't document their stories in written form, because that's part of the tradition and the culture. A lot of the time, it's hard to even come across those stories unless you meet someone from that culture. So one of the things we've been focusing on is collaborating with different communities. In this case, in Canada, the Indigenous community reached out to us, and we've had conversations with them about signals and the exhibitions we've done. It really shows that there is a universal need for many cultures not to be contained within a specific format, whether museums or traditional arts, but to open that up to storytelling at a more global level.

And the way Manu and I see it, AI and machine learning are a sort of blanket laid over what we call the fabric of society. But it's really about how it enters specific, fine grooves. In those grooves, there are smaller communities and smaller stories to be told that aren't necessarily at the surface level. So it's really exciting for us to have this sort of interaction at events, and the experiential side is a big component of that. Yeah, I'll leave it at that for now.

Very well said. And the last thing: we actually had some poignant, very intense, positive feedback from people who tried the exhibition we built from the short film, which used an EEG headset. At the Vancouver International Film Festival, an Indigenous Canadian woman told us that going through the experience changed the way she saw AI and, in a certain way, healed her. So part of that story touched cultures outside the one we initially targeted and resonated with diverse backgrounds. It was something really positive and beautiful to witness.

Also, someone in the comments, Paul, asked whether we used any other OpenAI tools. Correct me if I'm wrong, but I think you're asking about tools outside of Sora, like the LLM, the vision model, or DALL·E. One thing I can share is that some of Sora's early features allowed us, even without image input, to reference a DALL·E prompt. So we could do text to video, but through that process we could also go text to DALL·E and then DALL·E to video. And if you already had some DALL·E images generated, you could reuse them, try to add video to them, and see how it goes. Because Will and I were part of the DALL·E 2 alpha, we had a lot of generations saved in our folders. So we tried a bunch of those things early on and were really surprised by some of the results, and we ended up keeping one or two for the short film. When you see the short film later, that whoosh transition going through a tunnel comes from some early prompts Will was experimenting with. We didn't even think we would have that in the film. Those are what we call happy accidents. It's literally like Bob Ross: by mistake, the brush is dirty and leaves a trace on the canvas. At that point, it's too late to remove it, so you play around it and use it as part of your visual. I think it's a very cool metaphor for AI as a collaboration. You're working with that tool. You're not trying to replace yourself, and you're also not trying to take over and have full control of everything. There is a part of letting go and accepting the collaboration, the same way that working with Will forced me to let go of some things I maybe wanted control over, and vice versa. That's the beauty of human collaboration, and if you allow yourself to think this way with AI, you will be surprised by the results you get. So that's one of my beautiful takeaways from having those limitations.

Yeah, well said. And finally, execution. In terms of how we executed, a lot came down to editing. We edited this in DaVinci Resolve, but honestly, it doesn't really matter which editing software you prefer. What I did find, and maybe this goes back to Kush's question about what could be improved, is that the output of video models has a lot of limitations, though I've seen a lot of improvement in Sora since the version we used. At the time, we made this with the first early version, whose resolution, I think, was around 720p. We upscaled it using Topaz and other AI tools, but I believe Sora has much higher fidelity now that it's public. So I encourage you to try it and get a full overview of what's possible now. For us at the time, it was really about leaning into what we wanted to do without sacrificing quality and fidelity. So you might notice that some of it looks slightly different from other posts made with Sora, and that's because it was made with a very early version.

Also, Will is super humble, but a huge part of why I think this short film came together so well was the audio and VFX, which reinforced the style we had already generated in the visuals. Because we knew we were restricted in resolution, and we also wanted to talk about cultural preservation, there was this perfect analogy with archival data, archival visuals, and grainy film. So we used a lot of those terms in the prompting to get output that purposefully looked deteriorated, as if we had revived it and shot it on some old film camera. So the resolution didn't block us, because even at 720p, that was actually the look we wanted. Then putting it all together, doing the editing in software, and adding visual and sound effects really brought everything together. Will did a fantastic job, because we were really up against the deadline at the end and time was running out, and he was able to do quick edits. We worked really well together on a short timeline, and that's how he ended up giving us this result.

And also, to answer Stefan's question, we used FigJam for the whiteboarding we mentioned earlier. That was really our Bible. To this day, we still have the file open, with all the content: the research we did on traditional cultural elements and AI, and some of the visuals we didn't use as well. It was actually so cool: to build the presentation we're giving you right now, we went back to it, and it revived a lot of positive memories.

So with that, Manu, maybe you can pin my screen so I can show some of the... oh, actually, we can come back to that slide after. Yeah. So again, I invite you to watch the film via the link we'll share in the chat. We can't share audio, but we wanted to show you how the blend feature works in Sora, which is beautiful. Here, one shot turns into another. If you watch the butterfly disappearing and going into a tunnel, that's what the blend feature does: it connects shot A with shot B, which in this case is a shot going through the tree. You can't really tell, but Sora just does it so beautifully. And the eye is easily deceived by some of what happens. So for example...

Even right now, that was a very quick one, but you had this very minimalistic, coral-like biomimicry, a microscopic view transitioning from a supernova or galaxy-like view of space. And there's the relationship between those branches and murals and neural networks, with the coral shape evolving.

Similar to thunder or roots. And those two shots were also blended together. Exactly. And here's another example where we had a shot of a cheetah and then transitioned to a wolf. Again, this is all Sora going from shot A to shot B using blend. And this was about a year ago, so you can imagine how much it has improved in the span of a year.

There's something very cultural to say about that feature as well. In Caribbean culture, we have this concept of a folkloric creature called the Mufras, a monster that is a human shapeshifter. It was very important in the story that we try to represent that, but no one had ever released a Guadeloupean movie or sci-fi film based on that folklore. So we had no example of what it looks like besides my grandmother telling me stories at night. It was really fun to play with Sora and try to depict what it would look like. And I had the chance to show her the video when I went back to Guadeloupe last year. She is paralyzed right now and doesn't talk a lot, but I got a smile from her when she watched it, and that meant the world to me. So I think we did a good job. But again, that's the blend feature at play. We did not design that transition. We only provided those two visuals and a prompt describing how we thought they should connect. It took a lot of trial and error to get it, but it was beautiful.

So again, Sora works really well with... yeah, here we prompted a snake propping up, and then we used Remix to change the snake's material to this crystal material. Coming from a VFX and 3D background, that's not easy to do on this kind of timeline. It's not impossible in 3D, but it's not nearly as quick as Sora can do it.

And here's another example of going from animal to human and back again, and so on. A lot of this was done in editing, but the blend feature is still at work. And here's a small example of what I mentioned earlier about physics in this very early version of Sora, which has been improved since then. Things feel kind of floaty, not grounded in the physics and reality you would find in, say, an action film. We really used that to our advantage here. When you watch it with audio and video, it comes across as ethereal.

Yeah. I think we'll let everyone watch them on their own and transition to some Q&A. But right before we do, I just want to thank you for listening to us and to our stories. This one link is probably the best way to keep in touch with us, send us emails, or follow whatever we have coming next, including talks like this one, which we also repost. So don't hesitate to support us there. It's free. It's not a paid Patreon. We really just share with our community what we are doing.

Oh man. Well, Manu, Will, I think you had a real impact tonight. We'll see more of Will and Manu. They are also multilingual and we're in conversations about potentially doing more of a how-to with Sora in Spanish and in French. So that would be super rad.

We have a few really amazing events in the pipeline as well. We're live streaming Music is Math next week, so fingers crossed it all goes well. It's incredibly complex and technical. Caitlin and I are telling our team that we're pretending for a night that we're a theater, but we're not, so we're winging it. Music is Math is an exploration of sound, science, and creativity, and we have two performances. One is by the mother of robots, Carol Reiley. She's the founder of Deep Music AI, and she's also a computer science professor at Stanford. She'll be collaborating with the first-chair violinist from the SF Symphony and other musicians. They're going to be using an Emotiv headset, which, Will, I know you've talked about experimenting with as well. So it's hybrid: we have 80 people at OpenAI headquarters, but we're live streaming it not only for the community but for the public. So please share that link. I think it's going to be really rad.

And then we also have an in-person AI economics event. That one is invite-only and specifically for economists. So if you're an economist, whether in industry or academia, let us know. That one's two weeks away. It's almost completely full, but if you're interested and feel you have something meaningful to contribute, let me know.

And then, let's see, what else do we have in the pipeline? Oh, we launched the Paris chapter of the OpenAI Forum. The very first event we're going to host is with Ask Mona, an AI startup also very much focused on cultural preservation. They've created an app trained on cultural data: pieces of art and historical cultural artifacts you would find in a museum or an art center. You can ask the app questions. In Paris, for example, you could ask about the Mona Lisa. Where was it created? In what timeframe? Why is it culturally relevant? What style was it painted in? Who is the painter? So we're going to be hosting Ask Mona in the Paris chapter. Like I said, we're really focused on this theme of human creativity, because we believe there are so many questions around it and we want to demystify it. And we're so excited that we got to host Manu and Will tonight, and it's just the beginning, folks.

So please jump into the networking session and get to know each other. As you can tell, we really do know our members, and we want our members to know each other. Happy Wednesday, guys. I was so tired coming into this after a long day of work, but now I don't think I can sleep, because I'm so excited and really, really honored to have had this meeting with you. Thank you so much, Will.

Thanks for having us.

Thanks for having us too. Thank you.